The word “intelligence” traces back to the Latin “intelligentia” or “intellēctus”, derived from the verb “intelligere”, which means to comprehend or to perceive (Hobbes & Molesworth, 1841). However, a simple, unified definition of intelligence as the word is used today has proven impossible to agree upon. The following are a few definitions drawn from Legg & Hutter (2007):

- “The capacity to acquire and apply knowledge.” The American Heritage Dictionary, fourth edition, 2000

- “The ability to learn, understand and make judgments or have opinions that are based on reason” Cambridge Advanced Learner’s Dictionary, 2006

- “. . . that facet of mind underlying our capacity to think, to solve novel problems, to reason and to have knowledge of the world.” M. Anderson

- “Intelligence is what is measured by intelligence tests.” E. Boring

- “An intelligence is the ability to solve problems, or to create products, that are valued within one or more cultural settings.” H. Gardner

- “The ability to carry on abstract thinking.” L. M. Terman

Despite this challenge, our intelligence has not stopped changing and improving. The factors transforming human intelligence continue to change the way we perceive and interact with the world. After extensive reading of books and published papers on natural and artificial intelligence, I have identified four factors that play key roles in how our intelligence is transforming.

- Intelligence is naturally evolving

“In creating the human brain, evolution has widely overshot the mark”

— Arthur Koestler

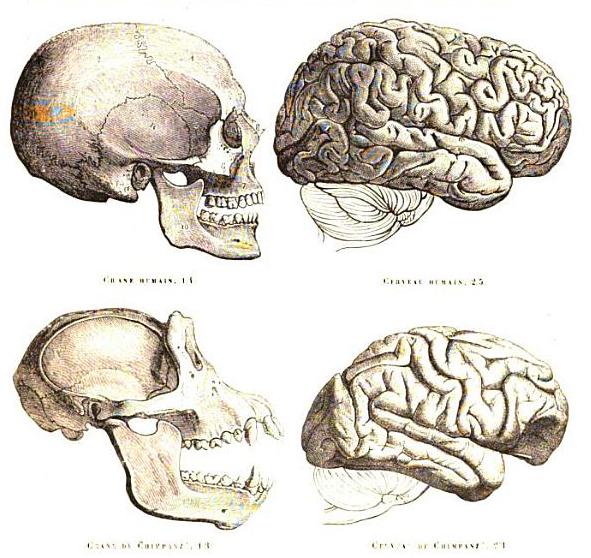

Looking at image 1, you can see how the physical shape of our brain has changed over time. Over millions of years of evolution, we have developed large foreheads compared with those of our ancestors or other mammals. This forehead has made room for a large amount of what is called the ‘neocortex’. The neocortex, which extends into the frontal lobe behind the forehead, is the part of the brain that allows us to think in what is called a hierarchical and exponential manner. This ability has allowed us to survive events that some other species, such as some reptiles, did not. Such a large neocortex has allowed humans to create things like art, science and mathematics. This evolution has also given us the ability to think about abstract concepts and hypothetical scenarios, allowing us to invent things that did not previously exist (e.g. technology!) (Kurzweil, 2013).

On the other hand, since the industrial revolution the nature of work has changed, creating jobs that demand a higher level of cognitive agility rather than physical strength. According to Flynn (2013), in 1900 only 3% of Americans had jobs that were “cognitively demanding” (e.g. doctors, lawyers, teachers); by 2013 that number had increased to 35%. This demand has meant that people have moved away from thinking concretely about the world and have developed the ability to think about abstract concepts. Being more cognitively agile means we can think of concepts that are not right in front of us. We can make assumptions and answer “what if” questions even about things we have never experienced (e.g. what if we lived on Mars, or what if we could fly?). Moving from a concrete to an abstract world has enabled us to do things like programming, understanding politics and having moral conversations. This change in work, hand in hand with natural evolution, has improved human cognitive abilities to the extent that, according to Flynn (2013), our IQ levels are generally higher than those of our grandparents.

- Intelligence is being replicated

By now most, if not all, of you reading this article will have heard or read about artificial intelligence, its threats and its promises. As the name suggests, artificial intelligence, or machine intelligence, is intelligence demonstrated by machines, in contrast to natural intelligence (Wikipedia, 2020). The term was coined back in 1956, and much of the early research, especially in the 1980s and 1990s, focused on replicating in machines how the human brain functions (Greene, 2019).

Most of the development has also tried to replicate intelligent human behaviour and then improve on it. The games of Chess, Go and Jeopardy are a few famous examples of how machines were programmed to replicate the human decision-making process and then improved until they outperformed the best human players of the time. Further improvements in AI allowed machines to do more human-like tasks. Image recognition has allowed machines to “see”, speech recognition has enabled them to “listen” or “hear”, text-to-speech has helped them “talk”, sensors, code and cameras have taught them how to navigate, and robotics has allowed them to walk, run, jump or show off backflips.

Even certain concepts in AI are defined against human-based thresholds. The current state of AI is simply called artificial intelligence or artificial specific intelligence (also known as narrow AI), as our current programs are only capable of doing one task well. The concept of AGI (Artificial General Intelligence), however, is defined as human-level AI, i.e. an AI that, like humans, can multitask and be good at a lot more than just one thing. Lastly, ASI (Artificial Super Intelligence) is an AI that is more intelligent than humans.

The future of artificial intelligence and how it will compete with natural intelligence is beyond the scope of this article. However, this replication of human intelligence has created an industry that is forecast to add 16 trillion USD to the global economy by 2030 (Chainey, 2017), an industry that has caused both excitement and fear (Samiei, 2019).

- Intelligence is being enhanced

In 1960 the term Cyborg (short for Cybernetic Organism) was coined by Manfred Clynes and Nathan S. Kline (Clynes & Kline, 1995). It describes an organism whose abilities have been restored or enhanced by an artificial or technological implant that provides feedback to the brain (Carvalko, 2012).

There have been several attempts around the world to create cyborgs. The first person with an antenna implanted in his skull, however, is Neil Harbisson. He was born colour blind, and with the help of scientist friends he invented an antenna, now embedded in his skull, that translates colour into sound frequencies and so allows him to hear colour instead of seeing it. He says that when he started dreaming in colour, he realised that the software and his brain had merged (Harbisson, 2012).

This improvement means that Harbisson can now hear a range of colours that the rest of us cannot see (e.g. ultraviolet), turning a disability into an ability beyond the norm. Harbisson has established The Cyborg Foundation in Barcelona with the mission of helping everyone to be disability-free (The Cyborg Foundation, 2020). While such a concept might initially be adopted by people with disabilities, the idea of humans being able to design themselves is expected to see uptake among the rest of the population (Samiei, 2019).

Another stream of research on enhancing intelligence focuses on eliminating the limitations of our brains. These include physical limitations (such as the size of our skull) and functional limitations (such as the brain's inefficient analogue data processing). One idea for overcoming such limitations is a cloud-based neocortex (the neocortex being the portion of our brain responsible for higher functions such as language, spatial reasoning and generating motor commands), proposed by Kurzweil (2014).

Kurzweil believes that over time this synthetic neocortex will overtake our biological one. The case discussed earlier, in which Harbisson began dreaming in colour and felt that the software and his brain had become one, supports Kurzweil’s point. Over time, synthetic neocortices will provide larger brain capacity, allowing us to learn more in a shorter time. Such concepts, once proven, will see rapid uptake and will change the landscape of how we learn. I have proposed what this future looks like in my thesis ‘On the danger of artificial intelligence’ (Samiei, 2019).

- Intelligence is adapting

“Technology is anything that was not there when you were born.”

— Alan Kay

I recall a story from my mum about my great grandmother. Back in the days when TV first made its way into houses in Iran, the very first thing my grandfather put on was the news. My great grandmother quickly put her scarf on her head and started greeting the news anchor on TV.

This story might seem funny to us reading it now, but have you imagined what stories the next generation's kids will be telling their grandkids about how we behaved with a certain technology? How an entire nation got together to watch a machine beat the best Go player? Or how scared of this new technology some of us were?

When talking about how we adapt to new situations, I like referencing life before the industrial revolution, when humans faced a lot more physical challenges daily: getting water from a well, tilling the soil with the help of animals, hand-making their garments, walking long distances and so on. After the industrial revolution, however, cheap and convenient devices replaced a lot of manual labour, a big portion of outdoor labour-intensive work was replaced by indoor office jobs, and naturally grown food was replaced by processed food containing large amounts of salt and sugar. These changes have increased obesity and, in turn, the rate of diseases like diabetes and heart disease (Rafferty, 2020).

However, if we don’t deliberately study these changes, chances are we won’t even notice that such a high rate of disease is not normal. This is because we haven’t seen a different world since the day we were born, so we accept that whatever is going on around us is “normal”.

A similar concept applies to future generations. They are born into a life hugely dependent on technology. However, the difference between the current technological shift and the previous industrial revolution is that we are now outsourcing our brain capability as opposed to our physical capability. We are outsourcing the part of our body that directly impacts our decision making. The implications of such outsourcing are far more fundamental than having weaker muscles. For example, one study suggests that the hippocampus (the part of the brain responsible for spatial relationships) physically shrinks in people who rely on GPS for navigation (O’Connor, 2019; Jennings, 2013).

Hence, as the generation that is laying the foundation for the future, I believe it is our responsibility to communicate to the next generation the impact of our daily decisions, along with the ethics and morals of what it means to be human.

References

Carvalko, J. (2012). The Techno-Human Shell: A Jump in the Evolutionary Gap. Sunbury Press.

Chainey, R. (2017, Jun 27). The global economy will be $16 trillion bigger by 2030 thanks to AI. Retrieved from https://www.weforum.org: https://www.weforum.org/agenda/2017/06/the-global-economy-will-be-14-bigger-in-2030-because-of-ai/

Clynes, M. E., & Kline, N. S. (1995). Cyborgs and space. The cyborg handbook, pp. 29–34.

Flynn, J. (2013). Why our IQ levels are higher than our grandparents’ | James Flynn. Retrieved from https://www.youtube.com: https://www.youtube.com/watch?v=9vpqilhW9uI

Greene, T. (2019). Researchers developed algorithms that mimic the human brain (and the results don’t suck). Retrieved from https://thenextweb.com: https://thenextweb.com/artificial-intelligence/2019/04/05/researchers-developed-algorithms-that-mimic-the-human-brain-and-the-results-dont-suck/

Harbisson, N. (2012, June). I listen to colour. Retrieved from https://www.ted.com: https://www.ted.com/talks/neil_harbisson_i_listen_to_color#t-33336

Hobbes, T., & Molesworth, W. (1841). Opera philosophica quae latine scripsit omnia: in unum corpus nunc primum collecta studio et labore Gulielmi Molesworth (Vol. 3). J. Bohn.

Jennings, K. (2013, April 6). Ken Jennings: Watson, Jeopardy and me, the obsolete know-it-all. Retrieved from https://www.youtube.com: https://www.youtube.com/watch?v=b2M-SeKey4o

Kurzweil, R. (2013). How to create a mind: The secret of human thought revealed. Penguin.

Kurzweil, R. (2014). The singularity is near. In Ethics and emerging technologies. London: Palgrave Macmillan.

Legg, S., & Hutter, M. (2007). A collection of definitions of intelligence. Frontiers in Artificial Intelligence and applications, 157, 17.

Li, J. (2015). The End of Human Brain Evolution? Retrieved from https://blogs.ubc.ca/: https://blogs.ubc.ca/joyli/2015/10/19/the-end-of-human-brain-evolution/

O’Connor, M. (2019, June 6). Opinion: Here’s what gets lost when we rely on GPS. Retrieved from https://www.heraldnews.com/: https://www.heraldnews.com/opinion/20190606/opinion-heres-what-gets-lost-when-we-rely-on-gps

Rafferty, J. P. (2020). The Rise of the Machines: Pros and Cons of the Industrial Revolution. Retrieved from https://www.britannica.com/: https://www.britannica.com/story/the-rise-of-the-machines-pros-and-cons-of-the-industrial-revolution

Samiei, S. (2019). On the danger of Artificial Intelligence. Auckland University of Technology.

The Cyborg Foundation. (2020). Retrieved from https://www.cyborgfoundation.com/

Wikipedia. (2020). Artificial intelligence. Retrieved from https://en.wikipedia.org: https://en.wikipedia.org/wiki/Artificial_intelligence